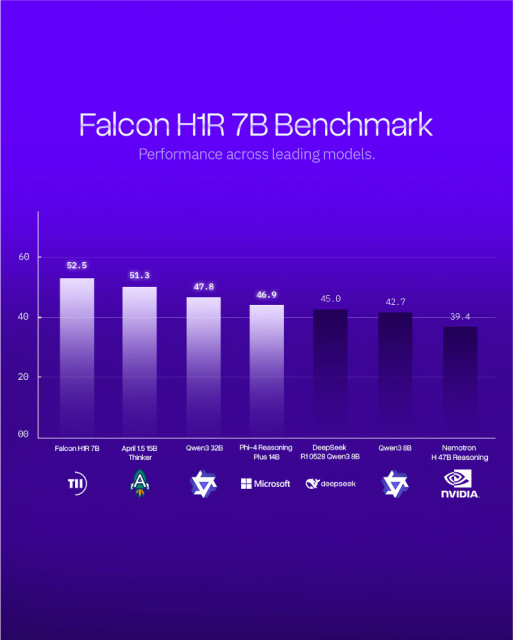

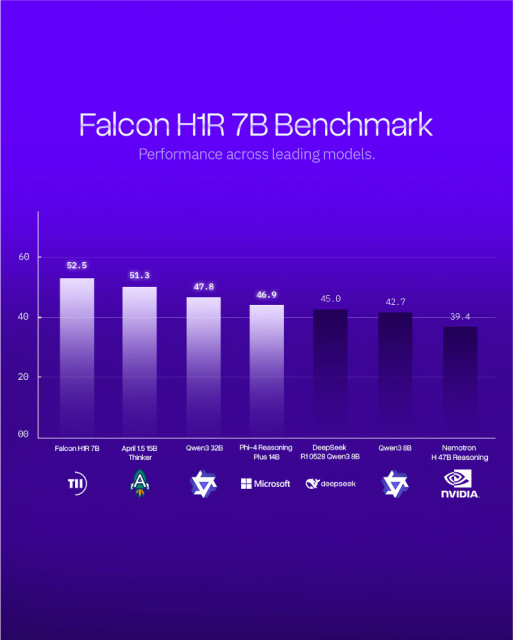

TII Launches Falcon Reasoning: Best 7B AI Model Globally, Also Outperforms Larger Models (Graphic: AETOSWire)

The Technology Innovation Institute (TII), the applied research pillar of Abu Dhabi’s Advanced Technology Research Council (ATRC), has announced the release of Falcon H1R 7B, a next-generation AI model that takes a significant step toward making advanced AI more accessible by delivering world-class reasoning performance in a compact, efficient, and openly available format.

With just 7 billion parameters, Falcon H1R 7B challenges and, in many cases, outperforms larger open-source AI models from around the world, including models from Microsoft (Phi 4 Reasoning Plus 14B), Alibaba (Qwen3 32B), and NVIDIA (Nemotron H 47B). This model release reaffirms TII’s position at the forefront of efficient AI innovation and reinforces the UAE’s growing influence in global technology leadership.

His Excellency Faisal al Bannai, Adviser to the UAE President and Secretary General of the Advanced Technology Research Council, said, “Falcon H1R reflects the UAE’s commitment to building open and responsible AI that delivers real national and global value. By bringing world-class reasoning into a compact, efficient model, we are expanding access to advanced AI in a way that supports economic growth, research leadership, and long-term technological resilience.”

A Breakthrough in Test-Time Reasoning

Falcon H1R 7B builds on the Falcon H1-7B foundation with a specialized training approach and a hybrid Transformer-Mamba architecture that improves both accuracy and speed.

“Falcon H1R 7B marks a leap forward in the reasoning capabilities of compact AI systems,” said Dr Najwa Aaraj, CEO of TII. “It achieves near-perfect scores on elite benchmarks while keeping memory and energy use exceptionally low, critical criteria for real-world deployment and sustainability.”

This training approach unlocks what researchers call “latent intelligence,” enabling Falcon H1R 7B to reason more effectively and efficiently. The model sets a new Pareto frontier between inference speed and output quality, meaning that its gains in speed do not come at the cost of accuracy.

Benchmarking the Best

In competitive benchmarks, Falcon H1R 7B delivered standout results:

· Math: Achieved 88.1% on AIME-24, outperforming ServiceNow AI’s Apriel 1.5 (15B), which scored 86.2%, and showing that a compact 7B model can rival or exceed much larger systems.

· Code and Agentic Tasks: Delivered 68.6% accuracy, best in class among models under 8B parameters, and scored higher on the LCB v6, SciCode Sub, and TB Hard benchmarks. Falcon H1R scored 34%, compared with 26.9% for China’s DeepSeek-R1-0528-Qwen3-8B, and even surpassed larger contenders such as Qwen3-32B (33.4%).

· General Reasoning: Demonstrated strong logic and instruction-following abilities, matching or approaching the performance of larger models such as Microsoft’s Phi 4 Reasoning Plus (14B) with only half the parameters.

· Efficiency: Achieved up to 1,500 tokens/sec per GPU at a batch size of 64, nearly double the speed of China’s Qwen3-8B. This gain comes from the hybrid Transformer-Mamba architecture, which delivers faster, more scalable performance without sacrificing accuracy.

“This model is the result of world-class research and engineering. It shows how scientific precision and scalable design can go hand in hand,” said Dr Hakim Hacid, Chief Researcher at TII’s Artificial Intelligence and Digital Research Centre. “We are proud to deliver a model that enables the community to build smarter, faster, and more accessible AI systems.”

Open Source and Community Driven

In line with TII’s commitment to AI transparency and collaboration, Falcon H1R 7B is released as an open-source model under the Falcon TII License. Developers, researchers, and institutions worldwide can access the model via Hugging Face, along with a full technical report detailing the training strategies and performance on key reasoning benchmarks.

This new release builds on the global success of TII’s Falcon program. Since their debut, Falcon models have consistently ranked among the world’s top-performing AI systems, with the first four generations achieving number-one global rankings in their respective categories. Across successive iterations, Falcon has set new benchmarks in performance, efficiency, and real-world deployability, demonstrating that compact, sovereign models can outperform significantly larger systems. These milestones underscore the growing leadership of Abu Dhabi and the wider UAE in frontier AI, as well as TII’s ability to deliver globally competitive research.

제12회 인터내셔널 슈퍼퀸 모델 콘테스트·제7회 월드 슈퍼퀸 한복 모델 결선대회 성료

제12회 인터내셔널 슈퍼퀸 모델 콘테스트 및 제7회 월드 슈퍼퀸 한복 모델 결선대회가 지난 10일과 11일 서울 송파구에 위치한 호텔 파크하비오 그랜드볼룸에서 이틀간 연이어 성대하게 개최되며 대한민국 대표 모델 대회로서의 위상을 다시 한번 입증했다. 인터내셔널 슈퍼퀸 모델협회(회장 김대한, 대표 박은숙)가 주최·주관한 두 행사는 화려한 드레스 무대와 전통 한복 런웨이를 하나의 브랜드 가치로 아우른 대규모 모델 페스티벌로, 모델을 꿈꾸는 이들에게 ‘반드시 도전하고 싶은 무대’로 평가받는 슈퍼퀸의 저력을 여실히 보여줬다. 두 대회 모두

제12회 인터내셔널 슈퍼퀸 모델 콘테스트·제7회 월드 슈퍼퀸 한복 모델 결선대회 성료

제12회 인터내셔널 슈퍼퀸 모델 콘테스트 및 제7회 월드 슈퍼퀸 한복 모델 결선대회가 지난 10일과 11일 서울 송파구에 위치한 호텔 파크하비오 그랜드볼룸에서 이틀간 연이어 성대하게 개최되며 대한민국 대표 모델 대회로서의 위상을 다시 한번 입증했다. 인터내셔널 슈퍼퀸 모델협회(회장 김대한, 대표 박은숙)가 주최·주관한 두 행사는 화려한 드레스 무대와 전통 한복 런웨이를 하나의 브랜드 가치로 아우른 대규모 모델 페스티벌로, 모델을 꿈꾸는 이들에게 ‘반드시 도전하고 싶은 무대’로 평가받는 슈퍼퀸의 저력을 여실히 보여줬다. 두 대회 모두

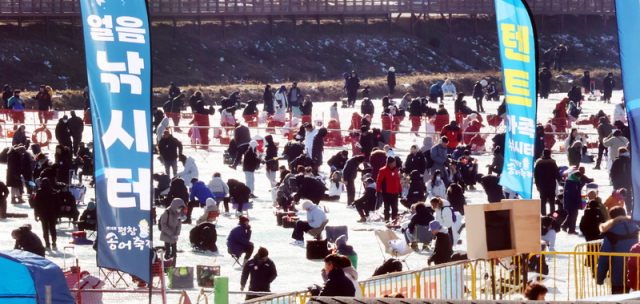

겨울 필드의 대안, 골퍼들이 찾는 ‘힐링 라운드’ 평창송어축제

평창송어축제가 20주년을 맞이해 더욱 풍성한 체험과 문화 프로그램을 선보인다. 이번 축제는 2026년 1월 9일부터 2월 9일까지 강원도 평창군 진부면 오대천 일원에서 개최된다. 2007년 시작된 평창송어축제는 2006년 수해로 침체된 지역 경제를 회복하기 위해 주민들이 자발적으로 만든 겨울 축제로, 민간 주도의 성공적인 사례로 평가받고 있다. 2025년 축제의 경제적 파급효과는 약 931억 원에 달했고, 축제 기간 6000여 개의 일자리가 창출된 것으로 나타났다. 올해 축제는 ‘겨울이 더 즐거운 송어 나라, 평창’이라는 슬로건 아

겨울 필드의 대안, 골퍼들이 찾는 ‘힐링 라운드’ 평창송어축제

평창송어축제가 20주년을 맞이해 더욱 풍성한 체험과 문화 프로그램을 선보인다. 이번 축제는 2026년 1월 9일부터 2월 9일까지 강원도 평창군 진부면 오대천 일원에서 개최된다. 2007년 시작된 평창송어축제는 2006년 수해로 침체된 지역 경제를 회복하기 위해 주민들이 자발적으로 만든 겨울 축제로, 민간 주도의 성공적인 사례로 평가받고 있다. 2025년 축제의 경제적 파급효과는 약 931억 원에 달했고, 축제 기간 6000여 개의 일자리가 창출된 것으로 나타났다. 올해 축제는 ‘겨울이 더 즐거운 송어 나라, 평창’이라는 슬로건 아

PGA투어 큐스쿨 마치고 귀국한 옥태훈 ‘국내 최강’에서 ‘세계 무대 경쟁자’로

PGA투어 큐스쿨 마치고 귀국한 옥태훈 ‘국내 최강’에서 ‘세계 무대 경쟁자’로

메이저 5승 챔피언 브룩스 켑카, PGA 투어 복귀 시동 美 ESPN 보도

메이저 5승 챔피언 브룩스 켑카, PGA 투어 복귀 시동 美 ESPN 보도

루키의 계보는 계속된다… ‘챌린지 1위’ 양희준, KPGA 투어에서 새 역사에 도전

루키의 계보는 계속된다… ‘챌린지 1위’ 양희준, KPGA 투어에서 새 역사에 도전

일출 핫플 하동케이블카, 새해 특별 프로모션

일출 핫플 하동케이블카, 새해 특별 프로모션

“PGA의 또 다른 관문, KPGA 투어”...미국인 브랜든 케왈라마니

“PGA의 또 다른 관문, KPGA 투어”...미국인 브랜든 케왈라마니

관악구, 2년 연속 종합청렴도 우수…`인공지능(AI), 세대공감` 청렴 키워드 집중

관악구, 2년 연속 종합청렴도 우수…`인공지능(AI), 세대공감` 청렴 키워드 집중

목록

목록